Introduction

NSW Health is committed to the development of evidence-based policies and programs and the ongoing monitoring, review and evaluation of existing programs in line with NSW Government requirements. The NSW Treasury Policy and Guidelines: Evaluation (TPG22-22)1 sets out mandatory requirements, recommendations and guidance for NSW General Government Sector agencies and other government entities to plan for and conduct the evaluation of policies, projects, regulations and programs. This guide aims to support NSW Health staff in planning and managing program* evaluations.

Evaluation can be defined as a rigorous, systematic and objective process to assess a program’s effectiveness, efficiency and appropriateness.1 Evaluations are commonly undertaken to measure the outcomes of a program, and to reflect on its processes. Evaluation is considered to be distinct from ‘pure’ research. Both processes involve the rigorous gathering of evidence using robust and fit-for-purpose study designs and methods. However, research is typically guided by different sorts of questions than evaluations, with the broad aim to generate new knowledge, and research findings tend to be published in peer-reviewed journals. Evaluations are guided by ‘key evaluation questions’, with the aim to inform decision making around policies and programs. Involving key stakeholders in the design of evaluations and reporting findings back to stakeholders are also distinctive elements of evaluation.

Evaluation is also distinct from more operational assessments of programs, such as program monitoring. Monitoring is a continuous and systematic process of collecting and analysing information about the performance of a program.1 Monitoring can help to inform ongoing improvement and identify trends, issues or gaps for further examination through evaluation. Although program monitoring and evaluation are unique activities, in practice it is best to take an integrated approach and work towards developing a program monitoring and evaluation framework in the initiative design phase.

The Treasury Evaluation Policy and Guidelines outline the requirements for suitable evaluation of NSW public programs to assess their effectiveness, efficiency, value and continued relevance, and to improve transparency. The online NSW Treasury evaluation workbooks and resources support the implementation of the Evaluation Guidelines and contain information to support monitoring and evaluation, including templates for program logic models, data matrices, project management, and reporting. The NSW Health Guide to Measuring Value provides specific guidance about measuring improvements across the quadruple aim of value-based healthcare at NSW Health as part of monitoring and evaluation.2

This guide to planning and managing program evaluations complements the NSW Treasury evaluation workbooks and resources. It promotes a proactive, planned and structured approach to planning and managing evaluations, including information on when and how to evaluate a program and how to make the most of the results. The guide draws on the principles and processes described in the Treasury Evaluation Policy and Guidelines, but it is framed specifically in relation to the health context, and it outlines the steps that should be taken when engaging an independent evaluator. The guide may be used to assist NSW Health staff in developing a complete evaluation plan, or in drafting an evaluation plan to which an evaluator can add value. The principles and proposed steps are also relevant for policy staff undertaking evaluations of their own programs. It should be noted that, in the field of evaluation, several terms are defined and used in different ways in different disciplines or contexts (for example: goal/aim and impact/outcome/benefits). This guide uses health-relevant language.

* In this guide the word ‘program’ is used interchangeably with ‘initiative’. The NSW Treasury Policy and Guidelines: Evaluation (TPG22-22) define an initiative as a program, policy, strategy, service, project, or any series of related events. Initiatives can vary in size and structure; from a small initiative at a single location, a series of related events delivered over a period, or whole-of-government reforms with many components delivered by different agencies or governments.

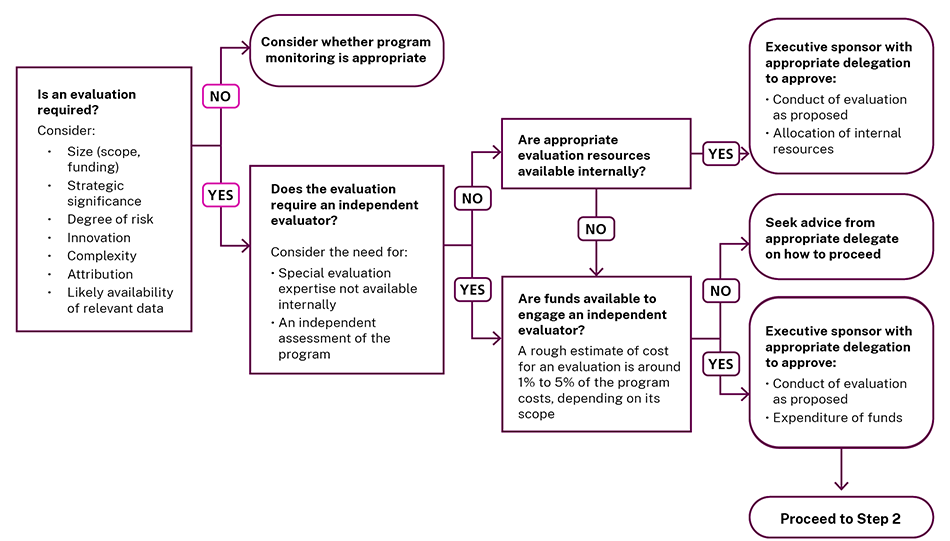

When to evaluate and when to engage an independent evaluator

Whether or not a program should be formally evaluated will depend on factors such as the size of the program (including its scope and level of funding), its strategic significance, and the degree of risk.1

Other important considerations include the program’s level of innovation and degree of complexity, and the extent to which any observed impacts will be able to be attributed to the program being evaluated, rather than to other external factors.

In some cases only certain components of a program will need to be evaluated, such as when a new implementation arm has been added to a program.

While some small-scale evaluations may be completed in-house, others will require engagement of an independent evaluator. An independent evaluator may be an individual or group external to the policy team managing the program or, for high priority/high risk programs, external to the program delivery agency. Engaging an independent evaluator is important where there is a need for special evaluation expertise and/or where independence needs to be demonstrated.3 An independent evaluator is likely to be particularly important for programs that have involved a reasonable investment, and those being assessed for continuation, modification, or scaling up.†

Whether evaluations are completed in-house or by an independent evaluator, the active engagement of NSW Health staff who are overseeing the program remains important.

Figure 1 depicts Step 1 when planning an evaluation – a process for conducting a pre-evaluation assessment to determine whether a program should be evaluated and, if so, whether an independent evaluator should be used.

Figure 1. Step 1: Pre-evaluation assessment.

Text alternative available.

† Scaling up refers to deliberate efforts to increase the impact of successfully tested health interventions so as to benefit more people and to foster policy and program development on a lasting basis. For more information and a step-by-step process for scaling up interventions, refer to Increasing the Scale of Population Health Interventions: A Guide.4

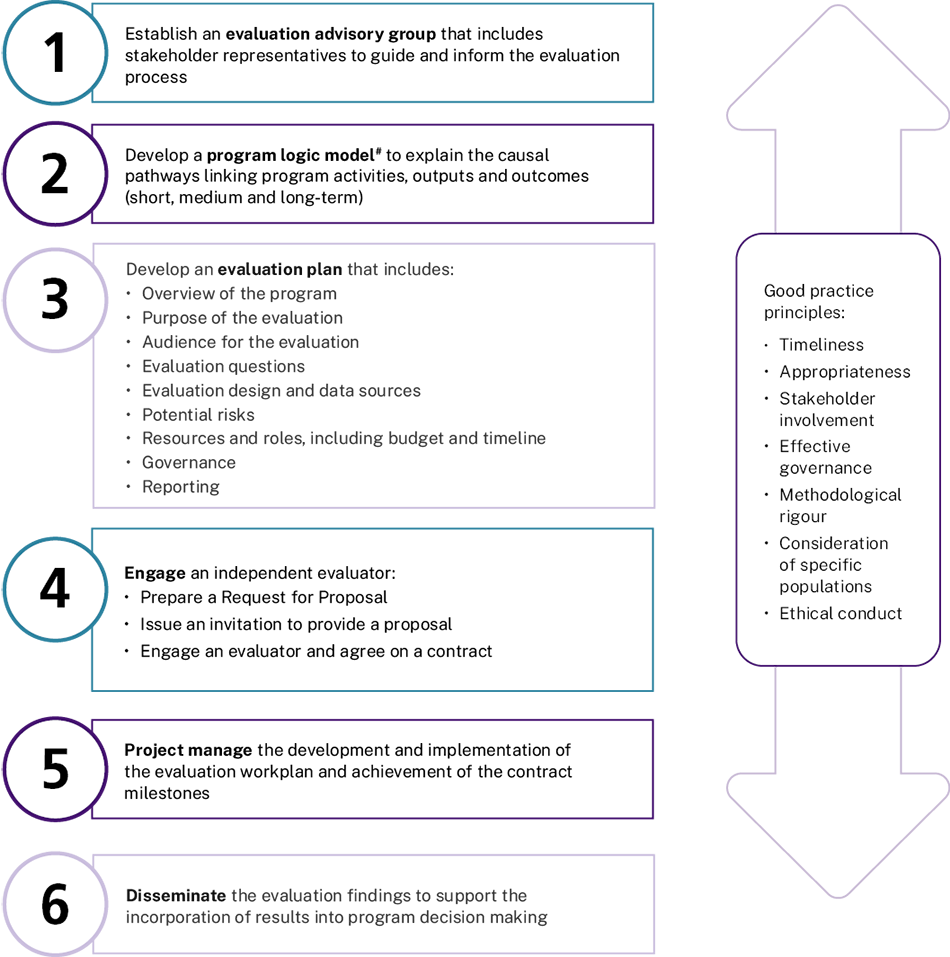

Figure 2 summarises Step 2, a process for planning a program evaluation where an executive sponsor with appropriate delegation has approved the engagement of an independent evaluator. The elements included in Figure 2 are explained in Sections 3 to 8 of this guide.

Figure 2. Step 2: Planning and managing a population health program evaluation.

Text alternative available.

# Ideally a program logic model should be developed in the program planning phase. For more information about the development of program logic models and their use in planning program evaluations, refer to Developing and Using Program Logic: A Guide.5

Evaluation principles

Best practice principles that underpin the conduct of effective evaluations should be incorporated where appropriate when planning and conducting an evaluation.1 Considerations relevant to population health program evaluations include timeliness, appropriateness, stakeholder involvement, effective governance, methodological rigour, consideration of specific populations, and ethical conduct.

Timeliness

Evaluation planning should commence as early as possible during the program planning phase.6 Incorporating evaluation planning into the broader process of program planning will help to ensure that the program has clear aims‡ and objectives, a strong rationale, and can be properly evaluated. Planning an evaluation early also ensures that a robust evaluation can be built into the design of the program. This includes, for example, trialling and implementing data collection tools, modifying existing data collection instruments, providing appropriate training for staff responsible for collecting data, and collecting baseline data before program implementation, if relevant. In some cases, evaluation requirements may influence the way a program is rolled out across implementation sites. Although not ideal, an evaluation can still be developed after the program has commenced.

Evaluations should conclude before decisions about the program need to be made. To that end, consideration should be given to the realistic amount of time needed to conduct an evaluation to ensure findings will be available when needed to support decision making.1 This is particularly relevant to outcome evaluations where the generation of measurable results may take some time.

‡ Program ‘aims’ may also be referred to as ‘goals’. In this guide, the term ‘aims’ will be used.

Appropriateness

The scope of an evaluation should be realistic and appropriate with respect to the size, stage and characteristics of the program being evaluated, the available evaluation budget, and practical issues such as availability of data.3 Scope refers to the boundaries around what an evaluation will and will not cover.7 The scope may define, for example, the specific programs (or aspects of these) to be evaluated, the time period or implementation phase to be covered, the geographical coverage, and the target groups to be included.

The design of and approach to an evaluation should be fit for purpose. For example, it is not necessary to use a complex experimental design when a simple one will suffice, and methods for collecting data should be feasible within the time and resources available.8 Focusing on the most relevant evaluation questions will help to ensure that evaluations are manageable, cost efficient and useful (see section on evaluation questions).7

Stakeholder involvement

Stakeholders are people or organisations that have an investment in the conduct of the evaluation and its findings. Stakeholders can include the primary intended users of the evaluation, such as program decision makers or program and policy staff, as well as people affected by the program being evaluated, such as community members or organisations.

Evaluations should foster input and participation among stakeholders throughout the process to enable their contribution to planning and conducting the evaluation as well as interpreting and disseminating the findings. A review of NSW Health-funded population health intervention research projects demonstrated that involving end users of research from the inception of projects increased the likelihood of findings influencing policy.9

See Table 5 in the Treasury Evaluation Policy and Guidelines for additional information on stakeholders and their potential roles in an evaluation.1

Effective governance

An evaluation advisory group should be established to guide and inform the evaluation process. Depending on the scope of the evaluation, this group may include representatives from the Ministry of Health, non-government organisations, local health districts (LHDs) or industry bodies, along with consumers of the program, academics or individuals with evaluation skills and expertise. If a steering committee already exists for the overall program, this committee or a sub-group of its members may also take the role of the evaluation advisory group.

Where the program being evaluated affects the health or wellbeing of Aboriginal peoples or communities, the group should include Aboriginal representation (e.g. from the Aboriginal Health & Medical Research Council, an Aboriginal Community Controlled Health Service, or the community).

The evaluation advisory group should agree to terms of reference that set out its purpose and working arrangements, including members’ roles and responsibilities (see also section on governance). As the group may be provided with access to confidential information during the evaluation process, its members should also be requested to agree to a confidentiality undertaking, on appointment, to ensure that any information provided to them is kept confidential.

Methodological rigour

Evaluations should use appropriate methods and draw on relevant data that are valid and reliable. The methods for data collection and analysis should be appropriate to the purpose and scope of the evaluation (see section on evaluation design and data sources). A quantitative, qualitative, or mixed approach may be most suitable. For evaluations that aim to assess the outcomes of a program, approaches to attributing any changes to the program being evaluated (as opposed to other programs or activities and other environmental factors) are particularly important. In real-world evaluations, as compared to ‘pure research’ studies, it can sometimes be difficult or impossible to implement studies with enough scientific rigour to make definitive claims regarding attribution – for example, where a program is already being implemented at scale and an appropriate comparison group cannot be identified. However, planning early will tend to increase the methodological options available. The NSW Treasury resource Outcome Evaluation Design Technical Note outlines approaches to investigating a program’s contribution to observed outcomes, sometimes referred to as ‘plausible contributions’.

Consideration of specific populations

The needs of specific populations, including Aboriginal peoples, should be considered in every stage of evaluation planning and implementation. Considerations for specific populations should include:

- the health context and health needs of specific populations who may be impacted by the evaluation

- engagement with specific populations throughout the design, development, implementation and dissemination of findings from the evaluation

- potential impacts of the evaluation on specific populations, including positive and negative impacts, and intended and unintended consequences.

Where a project affects Aboriginal peoples and communities, evaluation methods should be culturally appropriate and sensitive. Consider procuring evaluation services from Aboriginal providers to design and conduct the evaluation or contribute at key points (see Section 5.V in the Treasury Evaluation Policy and Guidelines).1 If the evaluation is being conducted as part of a larger study or project, cultural engagement should be built into the larger project at its outset.

Ethical conduct

The evaluation must be conducted in an ethical manner. This includes consideration of relevant legislative requirements, particularly regarding the privacy of participants and the costs and benefits to individuals, the community or population involved.

The National Health and Medical Research Council (NHMRC) document Ethical Considerations in Quality Assurance and Evaluation Activities provides guidance on relevant ethical issues and assists in identifying triggers for the consideration of ethical review.10 In addition, the NSW Health Guideline GL2007_020 Human Research Ethics Committees: Quality Improvement & Ethical Review: A Practice Guide for NSW provides a checklist to assist in identifying potential ethical risks. If the evaluation is determined to involve more than a low level of risk, full review by a human research ethics committee (HREC) is required.11

A list of NSW Health HRECs is available online. NSW Health HRECs provide an expedited review process for certain research projects that are considered to involve low or negligible risk to participants.12 Research or evaluation projects that have specific review requirements are outlined below.

The process of applying for, and obtaining, HREC approval can take some time and this should be factored into evaluation planning. Pre-submission conversations with an HREC officer can help in preparing a successful application and avoid unnecessary delays.

Where an evaluation is deemed to not require ethical review by an HREC, it is recommended that program staff prepare a statement affirming that an alternative approach to ethical review was considered to be appropriate, outlining the reasons for this decision.

Special ethical review requirements

- Population health research or evaluation projects utilising and/or linking routinely collected health (and other) data, including data collections owned or managed by the NSW Ministry of Health or the Cancer Institute NSW. Resources: How to apply to the NSW Population and Health Services Research Ethics Committee (PHSREC). HREC: NSW Population and Health Services Research Ethics Committee.

- Research or evaluations affecting the health and wellbeing of Aboriginal people and communities in NSW. Resources: Ethical conduct in research with Aboriginal and Torres Strait Islander Peoples and communities: Guidelines for researchers and stakeholders,13 Keeping research on track II: A companion document to Ethical conduct in research with Aboriginal and Torres Strait Islander Peoples and communities: Guidelines for researchers and stakeholders,14 AH&MRC Ethical Guidelines: Key Principles.15 HREC: Aboriginal Health & Medical Research Council of NSW (AH&MRC) Ethics Committee.

- Research or evaluations involving persons in custody and/or staff of Justice Health NSW. Resources: Getting ethics approval from the Justice Health NSW Human Research Ethics Committee. HREC: Justice Health NSW Human Research Ethics Committee; AH&MRC Ethics Committee.*

∆ See also PD2010_055 Research–Ethical & Scientific Review of Human Research in NSW Public Health Organisations.

* The Justice Health NSW HREC considers that all research involving people in custody in NSW will involve at least some Aboriginal peoples and will require review and approval by the AH&MRC Ethics Committee. AH&MRC Ethics Committee approval can be sought concurrently with Justice Health NSW HREC approval.

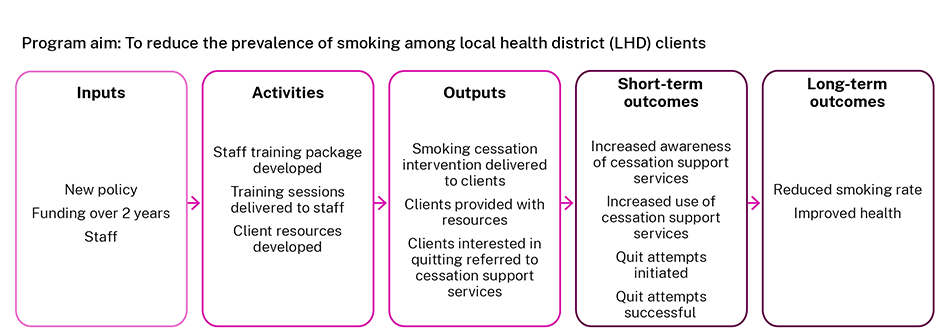

Program logic

Developing a program logic model is an important early step in designing a program and planning a program evaluation.

A program logic model is a schematic representation that describes how a program is intended to work by linking activities with outputs and with short, medium and longer-term outcomes. Program logic aims to show the intended causal links for a program. Wherever possible, these causal links should be evidence based.

A program logic model can assist in planning an evaluation by helping to:16,17

- determine what to evaluate

- identify key evaluation questions

- identify information needed to answer evaluation questions

- decide when to collect data

- provide a mechanism for ensuring acceptability among stakeholders.

A variety of methods are used to develop program logic models. One approach, known as ‘backcasting’, involves identifying the possible outcomes of the program, arranging them in a chain from short-term to long-term outcomes, and subsequently working backwards to identify the program outputs and activities required to achieve these outcomes. The long-term outcomes defined through this process should correspond to the program aims, and the short- to medium-term outcomes to the program objectives, as depicted below.

The process of developing a program logic model should be consultative and include consideration of available information about the program, the advice of program and evaluation stakeholders, as well as the insights of the team implementing the program and people affected by the program. The final model should be coherent, logical and clear so it can illustrate the program for both technical and nontechnical audiences.16

An example of a simple program logic model is presented in Figure 3. For more information and a step-by-step process for constructing a program logic model, refer to Developing and Using Program Logic: A Guide.5

Relationship between program components, program logic model, and evaluation plan18

- Program aims (program component) correspond to long-term program outcomes (program logic model) measured by outcome evaluation (evaluation plan)

- Program objectives (program component) correspond to short- to medium-term outcomes (program logic model) measured by outcome evaluation (evaluation plan)

- Program strategies/activities (program component) correspond to inputs, activities, outputs (program logic model) measured by process evaluation (evaluation plan)
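The correspondence above can also be written as a small lookup, which may help when sorting draft indicators or questions into process and outcome evaluation. The following Python sketch is illustrative only: the names `LOGIC_MODEL_MAP` and `evaluation_type_for` are hypothetical and are not part of any NSW Health or NSW Treasury tool; only the pairings themselves come from the relationship described above.

```python
# Illustrative lookup only. The names and structure are assumptions;
# the pairings follow the relationship listed above.
LOGIC_MODEL_MAP = {
    "long-term outcomes": ("program aims", "outcome evaluation"),
    "short- to medium-term outcomes": ("program objectives", "outcome evaluation"),
    "inputs": ("program strategies/activities", "process evaluation"),
    "activities": ("program strategies/activities", "process evaluation"),
    "outputs": ("program strategies/activities", "process evaluation"),
}


def evaluation_type_for(logic_model_element: str) -> str:
    """Return the evaluation type that measures a logic model element."""
    key = logic_model_element.strip().lower()
    if key not in LOGIC_MODEL_MAP:
        raise ValueError(f"Unknown logic model element: {logic_model_element!r}")
    _program_component, evaluation_type = LOGIC_MODEL_MAP[key]
    return evaluation_type


if __name__ == "__main__":
    for element in ("Outputs", "Long-term outcomes"):
        print(element, "->", evaluation_type_for(element))
```

A lookup like this is only a convenience for drafting; the program logic model itself remains the reference for what is evaluated and how.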

Figure 3. Example of a program logic model.

Text alternative available.

Developing an evaluation plan

The evaluation plan is a document that sets out what is being evaluated, why the evaluation is being undertaken, how the evaluation should be conducted, and how the findings will be used.

An evaluation plan that is agreed in consultation with stakeholders can help ensure a clear, shared understanding of the purpose of an evaluation and its process. For evaluations where an independent evaluator is engaged, elements of the evaluation plan will form the basis for a request for proposal (RFP) document (see section on preparing an RFP) and a contract with the successful evaluator.

Note that not all of the information required for a comprehensive evaluation plan may be known when preparing the RFP, and the evaluator may help further develop or refine the plan. However, the clearer and more comprehensive the information supplied in the RFP, the more likely prospective evaluators will be able to provide a considered proposal. The evaluation plan should be developed with reference to the components of the program and the program logic model; these inform the evaluation plan by identifying aspects of the program that could be assessed using process and outcome measures, as outlined in Table 2.

The specific content and format of an evaluation plan will vary according to the program to be evaluated. It is suggested that, for population health programs, at least the following elements are included:

- overview of the program

- purpose of the evaluation

- audience for the evaluation

- evaluation questions

- evaluation design and data sources

- potential risks

- resources and roles

- governance

- reporting.

Proposed inclusions in each section of the evaluation plan are summarised as follows.

Overview of the program

This section should include a brief overview of the broad aims and specific objectives of the program. The program objectives should be SMART:19

- Specific: clear and precise, including the population group and setting of the program

- Measurable: can be assessed using existing or potential data collection methods

- Achievable: reasonable and likely to be achieved within the timeframe

- Relevant: likely to be achieved given the activities employed, and appropriate for realising the aims

- Time specific: having a timeframe for meeting the objective.

This section should also outline the program’s development history, its strategies and/or activities, key stakeholders, and the context in which it is being developed and implemented. The program logic model should be included.

Purpose of the evaluation

The fundamental reason for conducting the evaluation should be clearly stated. In articulating the purpose of the evaluation, it is important to consider the decisions that will be made as a result of the findings (such as program adjustments to enhance efficiency, justification of investment to program funders, scaling up of a program) and when these decisions will be made. For example, the purpose of an evaluation may be to inform decisions about developing, improving, continuing, stopping, reducing or expanding a program.

Audience for the evaluation

A related consideration is the primary audience for the evaluation: the people or groups that will use the information produced by the evaluation. These may include decision makers, program implementation staff, organisations running similar programs in other jurisdictions or countries, and consumers. The primary users should be specified in this section of the evaluation plan.

Evaluation questions

Evaluation questions serve to focus an evaluation and provide direction for the collection and analysis of data.3 Evaluation questions should be based on the most important aspects of the program to be examined. The program logic model can help in identifying these. For example, the program logic can help to convert general questions about the effectiveness of a program into specific questions that relate to particular outcomes in the causal pathway, and questions about the factors most likely to affect those outcomes.16

The number of evaluation questions agreed upon should be manageable in relation to the time and resources available. It is important to think strategically when determining what information is needed most so that the evaluation questions can be prioritised and the most critical questions identified.7

Different types of evaluation require different sorts of evaluation questions.

Types of evaluation and typical evaluation questions18

Process evaluation

Focus

- Investigates how the program is delivered: activities of the program, program quality, and who it is reaching

- Can identify failures of implementation, as distinct from program ineffectiveness

Typical questions

- How is the program being implemented?

- Is the program being implemented as planned?

- Is the program reaching the target group?

- Are participants satisfied with the program?

Outcome evaluation

Focus

- Measures the immediate effects of the program (does it meet its objectives?) and the longer-term effects of the program (does it meet its aims?)

- Can identify unintended effects

Typical questions

- Did the program produce the intended effects in the short, medium or long term?

- For whom, in what ways and in what circumstances?

- What unintended effects (positive and negative) were produced?

- To what extent can changes be attributed to the program?

- What were the particular features of the program and context that made a difference?

- What was the influence of other factors?

Economic evaluation

Focus

- Considers efficiency by standardising outcomes, often in terms of dollar value

- Answers questions of value for money, cost-effectiveness or cost-benefit

Typical questions

- Was the intervention cost-effective (compared to alternatives)?

- What was the ratio of costs to benefits?

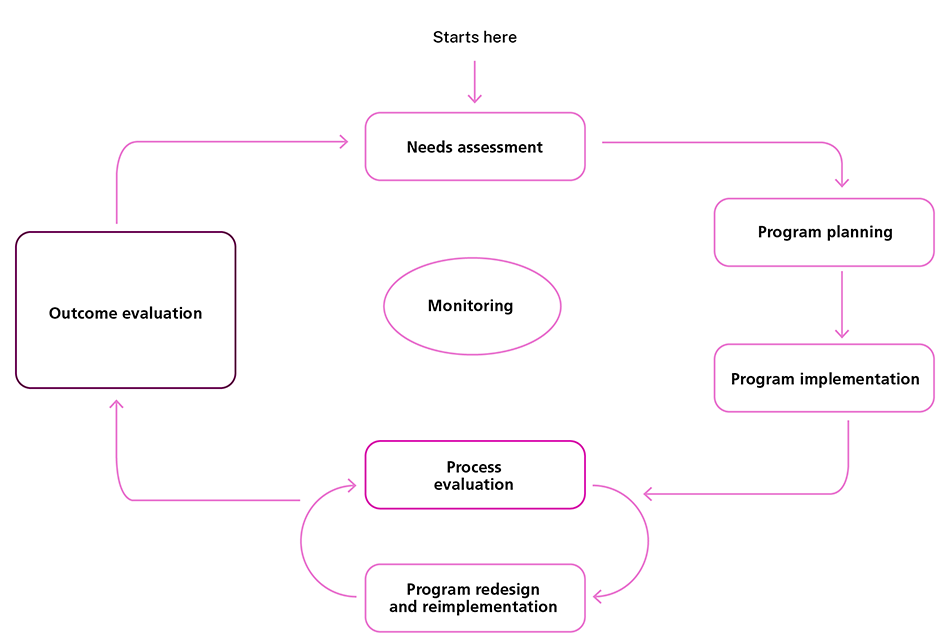

Depending on its purpose and scope, the evaluation may include process, outcome or economic measures≈ or a combination of these. For example, while an innovative program (such as the pilot of an intervention) may require an outcome evaluation to determine whether the program was effective, rollout of an existing successful program may only require a process evaluation to monitor its implementation.6 Figure 4 illustrates where different types of evaluation are likely to fit in the planning and evaluation cycle. Note that an assessment of the outcomes of a program should be made only after it has been determined that the program is being implemented as planned and appropriate approval (per delegations) for outcome evaluation has been obtained. Consideration should be given to the likely time required for program redesign (where relevant) and the expected time lag until outcomes are realised.

For each evaluation question, one or more indicators should be identified that define how change or progress in relation to the question will be assessed (for example, ‘number of clients enrolled’, ‘client satisfaction with program’, ‘change in vegetable intake’, ‘changes in waist circumference’). The indicators should meet the SMART criteria (specific, measurable, achievable, relevant, time specific).

Figure 4. Planning and evaluation cycle17.

Text alternative available.

≈ For more information about when to commission an economic evaluation and an overview of economic evaluation techniques, refer to Engaging an Independent Evaluator for Economic Evaluations: A Guide.20

Evaluation design and data sources

The design of a program evaluation sets out the combination of research methods that will be used to provide evidence for key evaluation questions. The design informs the data needed for the evaluation, when and how the data will be collected, the data collection instruments to be used, and how the data will be analysed and interpreted. More detailed information about quantitative study designs used in outcome evaluations is provided in Study Design for Evaluating Population Health and Health Service Interventions: A Guide.21 Data may be collected using quantitative, qualitative or mixed methods; the NSW Treasury resource Evidence in Evaluation technical note describes each of these.

Data that will provide the information required for each indicator in order to answer the evaluation questions should be identified and documented. Data sources may include both existing data (e.g. routinely collected administrative data, medical records) and data that will have to be generated for the evaluation (e.g. survey of staff, interviews with program participants). For new data, consideration should be given to data collection methods, when data should be collected, who will be responsible for data collection, and who will be the data custodian (i.e. who has administrative control over the data).

Details about data required for an evaluation are often presented alongside relevant evaluation questions and indicators in a table (or data matrix). An example is included below.

It may be useful to seek advice from data, research or evaluation specialists when considering possible evaluation designs and data sources. Alternatively, potential independent evaluators may be asked to propose a design or enhance an initial idea for a design as part of their response to a request for proposal.

Example of an evaluation data matrix

| Evaluation question | Indicator | Data source | Timeframe | Responsibility |
| --- | --- | --- | --- | --- |
| Did the program result in increased quit attempts among smokers? | Number of quit attempts initiated in previous 3 months among LHD clients who were smokers; number of successful quit attempts in previous 3 months among LHD clients who were smokers | Client survey | Baseline, then 3, 6 and 12 months post-intervention | LHD staff |
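Where a data matrix is kept electronically, each row can be held as a simple record so that incomplete rows are easy to spot before the plan is finalised. The sketch below is one possible representation in Python, not a prescribed format: the class `DataMatrixRow` and the helper `missing_fields` are hypothetical names, and the example values are taken from the matrix above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DataMatrixRow:
    """One row of an evaluation data matrix (hypothetical structure)."""
    evaluation_question: str
    indicators: List[str]
    data_source: str
    timeframe: str
    responsibility: str


def missing_fields(row: DataMatrixRow) -> List[str]:
    """Return the names of columns that are still empty for this row."""
    return [name for name, value in vars(row).items() if not value]


# Example row using the values from the matrix above.
quit_attempts_row = DataMatrixRow(
    evaluation_question=(
        "Did the program result in increased quit attempts among smokers?"
    ),
    indicators=[
        "Number of quit attempts initiated in previous 3 months "
        "among LHD clients who were smokers",
        "Number of successful quit attempts in previous 3 months "
        "among LHD clients who were smokers",
    ],
    data_source="Client survey",
    timeframe="Baseline, then 3, 6 and 12 months post-intervention",
    responsibility="LHD staff",
)

if __name__ == "__main__":
    print(missing_fields(quit_attempts_row))
```

Checking each row for empty columns is a quick way to confirm that every evaluation question has at least one indicator, a data source, a timeframe and a responsible party.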

Potential risks

Potential risks to the evaluation and possible mitigation strategies should be identified early in the evaluation planning process.

Potential risks to the evaluation may include, for example, inability to recruit participants or low response rates; evaluation findings that are inconclusive; or difficulty in determining the extent to which the changes observed are attributable to the program. Potential independent evaluators may be asked to determine possible risks and strategies for managing them as part of their response to a request for proposal.

A matrix to analyse the likelihood and consequences of any risks, and strategies for their management, is presented below. The NSW Health policy directive PD2022_023 Enterprise-Wide Risk Management Policy includes further information and tools.

While the risk management matrix and policy directive relate primarily to program management and corporate governance, the principles are also relevant to program evaluation.

Risk management matrix

| Risk source | Likelihood | Consequence | Risk rating | Action to manage risk |
| --- | --- | --- | --- | --- |
| List risks here | Rare / Unlikely / Possible / Likely / Almost certain | Minimal / Minor / Moderate / Major / Catastrophic | | List actions to manage risks here |
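As an illustration of how a risk rating might be derived from the likelihood and consequence scales in the matrix, the sketch below multiplies the rank of each scale and maps the product to a rating band. The scoring rule, the band thresholds and the rating labels ("Low", "Medium", "High", "Extreme") are assumptions made for this example and are not taken from PD2022_023; the policy directive should be consulted for the rating scheme that applies.

```python
# Likelihood and consequence scales as listed in the risk management matrix.
LIKELIHOOD = ["Rare", "Unlikely", "Possible", "Likely", "Almost certain"]
CONSEQUENCE = ["Minimal", "Minor", "Moderate", "Major", "Catastrophic"]


def risk_rating(likelihood, consequence):
    """Return an illustrative risk rating for a likelihood/consequence pair.

    ASSUMPTION: score = likelihood rank x consequence rank (each 1 to 5),
    with hypothetical bands. This is not the official NSW Health scheme.
    """
    score = (LIKELIHOOD.index(likelihood) + 1) * (
        CONSEQUENCE.index(consequence) + 1
    )
    if score >= 15:
        return "Extreme"
    if score >= 8:
        return "High"
    if score >= 4:
        return "Medium"
    return "Low"


# Example risks drawn from this guide; the likelihood and consequence
# values assigned to them here are hypothetical.
risks = [
    ("Low response rate to client survey", "Likely", "Moderate"),
    ("Evaluation findings are inconclusive", "Possible", "Minor"),
]
for source, likelihood, consequence in risks:
    print(f"{source}: {risk_rating(likelihood, consequence)}")
```

Under these assumed bands, a risk that is likely with a moderate consequence scores 4 x 3 = 12 and is rated "High".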

Resources and roles

The human, financial and other resources available for the evaluation should be documented. This includes both internal resources for planning, procurement and project management, and a budget for engaging an independent evaluator. Financial resourcing for an evaluation will need to be considered at an early stage to ensure funding is approved and allocated in the program budget. A rough estimate of cost for an evaluation is 1% to 5% of the program costs;1 however, the actual cost will be informed by the type and breadth of evaluative work to be undertaken.
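The rough estimate above can be turned into an indicative range for budgeting discussions. The following sketch applies the 1% and 5% figures to a program cost; the function name `evaluation_budget_range` and the $2 million program cost are hypothetical, and the result is a starting point only, since the actual cost depends on the type and breadth of evaluative work.

```python
def evaluation_budget_range(program_cost, low_share=0.01, high_share=0.05):
    """Return (low, high) rough evaluation cost estimates.

    Defaults reflect the 1% to 5% rule of thumb cited in this guide.
    """
    if program_cost < 0:
        raise ValueError("program_cost must be non-negative")
    return program_cost * low_share, program_cost * high_share


# Hypothetical program with a total cost of $2,000,000.
low, high = evaluation_budget_range(2_000_000)
print(f"Indicative evaluation budget: ${low:,.0f} to ${high:,.0f}")
```

For a $2,000,000 program this gives an indicative range of $20,000 to $100,000.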

The roles of Ministry staff, stakeholders and the evaluator should also be clearly documented. The timeframe for the evaluation should be linked to the stated roles and resources; this should take into account any key milestones (e.g. decision points).

Governance

As noted previously, an evaluation advisory group should be established to guide the planning and conduct of the evaluation. The roles and responsibilities of this group should be clearly stated in its terms of reference and outlined in this section of the evaluation plan.

Reporting

A plan for how the results of the evaluation will be reported and disseminated should be agreed at an early stage. The dissemination plan should consider the range of target audiences for the evaluation findings (e.g. program decision makers, community members), their specific information needs, and appropriate reporting formats for each audience (e.g. written or oral, printed or electronic).

Note that the public release of evaluation findings is recommended to foster accountability and transparency, contribute to the evidence base, and reduce duplication and overlap.1

Timeliness of reporting should also be considered; for example, staged reporting during the course of an evaluation can help to ensure that information is available at crucial decision-making points.3

Preparation of a detailed evaluation report that describes the program and the evaluation design, activities and results in full is important to enable replication or wider implementation of the program.22 In addition, more targeted reporting strategies should be considered as part of dissemination planning. These may include, for example, stakeholder newsletters, brief plain language reports, or presentations to decision makers or at conferences, workshops and other forums.

If appropriate, evaluation results may also be published in a peer-reviewed journal. If it is proposed to publish a journal paper, the evaluation advisory group should pre-plan the procedures for writing and authorship; review of the evaluation by an HREC should also be considered at an early stage, as some journals require ethics approval. Consideration should be given to publication in an open access journal to enhance the potential reach of the results.

Procurement

While small-scale evaluations may be completed in-house, evaluations of programs involving a reasonable investment, and those being reviewed for continuation or expansion, may require procurement of an independent evaluator.

NSW Health requirements for the procurement of goods and services, including engagement of consultants, are outlined in the policy directive PD2023_028 NSW Health Procurement (Goods and Services) Policy. The NSW Health Procurement Portal provides a step-by-step overview of the procurement process and includes links to a range of tools, templates and other resources to support procurement.

The approvals required for the procurement process should be determined, noting that the level of approval will depend on the estimated cost of the consultancy as per the Delegations Manual.23 All of the necessary approvals (e.g. funding approval by an appropriately delegated officer, approval to issue a tender) should be obtained prior to commencing procurement.

The specifications of the project should be developed and documented in a request for proposal concurrently with a plan for assessing responses. This plan should include assessment criteria and weightings and should identify who will be part of the assessment panel.

Preparing the request for proposal

The process for engaging an independent evaluator will require preparation of a request for proposal (RFP). The RFP document outlines the specifications of the evaluation project and should be developed with reference to the parts of the evaluation plan that have been agreed with program stakeholders. An RFP template is available from the Ministry of Health Procurement Portal.

The RFP should be clear and comprehensive. The more information that can be provided, the greater the likelihood that potential evaluators will understand what is required of them and prepare a considered and appropriate response. Table 6 in the Treasury Evaluation Policy and Guidelines has additional information with examples to assist in ensuring potential evaluators can effectively design and cost their proposal.1 Consider the following when preparing an RFP document:

When describing the program to be evaluated:

- Include a comprehensive overview of the key features of the program, including:

- the aims and objectives of the program

- its development and implementation history, including any previous or concurrent evaluations, and current stage of development or implementation of the program

- components and/or activities of the program, its scale (e.g. LHD-specific, statewide), and who is delivering the program

- governance and key stakeholders

- the context in which the program is being developed and/or implemented

- Include the program logic model, if one exists

- Ensure any technical terms are defined

- Ensure key terms are used accurately and consistently (e.g. cost benefit versus cost effectiveness).20

When describing the evaluation and specifying the work to be undertaken by the evaluator:

- Ensure that the purpose of the evaluation is expressed in a way that will not compromise the objectivity of the evaluator. The purpose should be couched in neutral terms (e.g. “to inform decisions about scaling up the program” rather than “to justify plans to scale up the program”)

- Specify any evaluation questions, indicators and data sources that have already been agreed. If appropriate, include a draft evaluation plan

- Clearly delineate which tasks are within scope for the evaluator and those that are out of scope

- Describe in detail the data that will be available for use by the evaluator, how the evaluator will be given access to the data, and any conditions on its use. Include as much information about these data sources as possible (e.g. data collection methods, size of dataset, relevant variables, any limitations of the data, custodianship, confidentiality)

- Ensure that timeframes for deliverables are realistic and achievable. In determining timeframes, consider the size and complexity of tasks to be undertaken by the evaluator, any key decision points for which results will be required, and any factors that could delay the completion of tasks (e.g. end of year)

- It is recommended that an indicative budget is specified. The budget should be estimated based on the tasks expected of the evaluator and the funds available

- Clearly outline the format in which evaluation findings should be reported by the evaluator. In particular, it is important to consider whether reports should include only results from the evaluation or also an interpretation and/or recommendations. Whether or not recommendations should be included will depend on the program, the purpose of the evaluation and the stakeholders involved.

When listing criteria for assessing applications:

- It is suggested that the criteria listed in “Potential criteria for assessing applications” be considered

- If the evaluator has been asked to identify potential risks and appropriate mitigation strategies, it may be desirable to include a relevant assessment criterion (e.g. “Demonstrated experience and expertise in risk identification and mitigation related to evaluations and appropriateness of the risk mitigation strategy for this evaluation project”).

Engaging an evaluator

Responses to the RFP should be assessed in accordance with the agreed plan. A report and recommendation should be prepared and approval for the recommendation obtained as per delegations.

Once an evaluator has been selected, a contract will need to be signed. Advice on selecting, developing and maintaining contracts is available from the Legal and Regulatory Services intranet site or the NSW Health Procurement Portal.

For projects with a value of $150,000 (GST inclusive) or more, it is a requirement under the Government Information (Public Access) Act 2009 (GIPA Act) that contract information is disclosed on the NSW Government tenders website; see PD2018_021 Disclosure of Contract Information. The GIPA Disclosure Form is available from the NSW Health Procurement Portal.

Potential criteria for assessing applications

- Demonstrated experience on evaluation projects of comparable scale and complexity, and/or with specific techniques or approaches (e.g. “Significant relevant evaluation experience and capability to deliver the full scope of the project requirements including the experience of the designated staff in undertaking similar evaluations”, “Demonstrated experience with both quantitative and qualitative evaluation methods and in producing high-quality evaluation reports”)

- Demonstrated experience on projects in relevant sectors or settings (e.g. “Demonstrated experience in working in the general practice setting”, “Demonstrated understanding of family violence and the associated issues”)

- Quality, feasibility and appropriateness of the proposal for conducting the evaluation (e.g. “Quality and relevance of the proposal for achieving the required evaluation services and deliverables as identified in this RFP”, “Feasibility, appropriateness and scientific rigour of the proposed work plan and methodology for achieving the required Services and Deliverables”)

- Feasibility and value for money of proposed fee structure (e.g. “Proposed fee structure is feasible and represents value for money”).
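Where the assessment plan assigns weightings to criteria, panel scores can be combined into a single weighted score for each response. The sketch below is illustrative only: the criterion keys paraphrase the four criteria listed above, while the weightings, the 0 to 10 scoring scale, the evaluator names and the scores are all hypothetical. The weightings actually used should be those agreed in the assessment plan.

```python
# ASSUMPTION: hypothetical weightings that sum to 1.0.
WEIGHTS = {
    "evaluation_experience": 0.30,
    "sector_experience": 0.20,
    "proposal_quality": 0.35,
    "value_for_money": 0.15,
}


def weighted_score(scores, weights=WEIGHTS):
    """Combine per-criterion panel scores into one weighted score."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    if set(scores) != set(weights):
        raise ValueError("scores must cover exactly the weighted criteria")
    return sum(weights[name] * scores[name] for name in weights)


# Hypothetical panel scores on a 0 to 10 scale.
proposals = {
    "Evaluator A": {
        "evaluation_experience": 8,
        "sector_experience": 6,
        "proposal_quality": 7,
        "value_for_money": 9,
    },
    "Evaluator B": {
        "evaluation_experience": 7,
        "sector_experience": 9,
        "proposal_quality": 8,
        "value_for_money": 6,
    },
}

# Rank responses from highest to lowest weighted score.
ranked = sorted(
    proposals, key=lambda name: weighted_score(proposals[name]), reverse=True
)
for name in ranked:
    print(f"{name}: {weighted_score(proposals[name]):.2f}")
```

A weighted score supports, but does not replace, the panel's report and recommendation, which still require approval as per delegations.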

Managing the development and implementation of the evaluation workplan

Planning an evaluation requires project management skills, including the development of a workplan with clear timeframes and deliverables. An independent evaluator will usually develop a draft workplan as part of their response to the RFP; once the evaluator is contracted, this workplan can be refined with the evaluation advisory group.

Plan to establish an effective evaluation governance structure with clear terms of reference from the outset.

The active involvement of NSW Health staff throughout the evaluation is important for successful project management. Regular scheduled updates and meetings with the evaluator throughout the implementation of the evaluation will help communication and facilitate a shared understanding of the evaluation needs and the management of any problems that may arise. Consider using structured project management methods or systems to keep the evaluation on track.

A successful RFP process will identify an evaluator who has the skills and experience to rigorously collect, analyse and report the data. The contract with the evaluator will include requirements for the provision of a draft report or reports for comment, as well as the writing of a final report incorporating feedback. The Ministry’s role in reviewing the draft report is not to veto the results but to comment on structure, accuracy and whether it has answered the evaluation questions.

Disseminating and using evaluation findings

The fundamental reason for conducting an evaluation is to inform health policy and program decisions for the benefit of the NSW public.

Factors that support the incorporation of results into program decision making include:

- the engagement of end-users of the evaluation findings through the program planning and evaluation cycle

- active dissemination strategies (not limited to publications in academic journals or presentations at academic conferences)

- the tailored communication of results and recommendations to decision makers

- an organisational culture supportive of the understanding and use of evidence.9,24,25

Before dissemination, the final evaluation report will need to be approved for release by the appropriate Ministry delegate. Once approved for release, communicating the completed evaluation results is important to inform the development of the program as well as future population health programs. It is good practice to make results available to any stakeholders who have had input into the evaluation.

It is best to plan early for how the results of the evaluation will be reported and communicated (see section on reporting).

Dissemination of evaluation findings may take a number of approaches:

- evaluators provide a feedback session to stakeholders

- electronic newsletters tailored to stakeholders

- results reported to relevant Ministry committees and management structures

- placing the final report online

- conference papers

- publication of results in a peer-reviewed journal

- if suitable, communication to the media, with the involvement of the Ministry’s Public Affairs Unit.

Crucially, the results and/or recommendations from the evaluation report will need to be reviewed and responded to by the policy branch responsible for the program, and an implementation plan or policy brief developed.

References

- NSW Treasury. NSW Treasury Policy and Guidelines: Evaluation (TPG22-22). Sydney: NSW Treasury; 2023.

- NSW Health. NSW Health Guide to Measuring Value. Sydney: NSW Ministry of Health; 2023.

- Owen JM. Program evaluation: forms and approaches. 3rd edition. New York: The Guilford Press; 2007.

- Centre for Epidemiology and Evidence. Increasing the Scale of Population Health Interventions: A Guide. Evidence and Evaluation Guidance Series, Population and Public Health Division. Sydney: NSW Ministry of Health; 2023.

- Centre for Epidemiology and Evidence. Developing and Using Program Logic: A Guide. Evidence and Evaluation Guidance Series, Population and Public Health Division. Sydney: NSW Ministry of Health; 2023.

- Bauman AE, Nutbeam D. Evaluation in a nutshell: a practical guide to the evaluation of health promotion programs. 2nd edition. North Ryde: McGraw Hill; 2014.

- United Nations Development Programme. Handbook on Planning, Monitoring and Evaluating for Development Results. New York: UNDP; 2009.

- Robson C. Small-Scale Evaluation: Principles and Practice. London: Sage Publications Inc.; 2000.

- Milat AJ, Laws R, King L, Newson R, Rychetnik L, Rissel C, et al. Policy and practice impacts of applied research: a case study analysis of the New South Wales Health Promotion Demonstration Research Grants Scheme 2000–2006. Health Res Policy Sys 2013; 11: 5.

- National Health and Medical Research Council. Ethical Considerations in Quality Assurance and Evaluation Activities. Canberra: NHMRC; 2014.

- National Health and Medical Research Council. National Statement on ethical conduct in human research 2007 (updated 2018). Canberra: NHMRC; 2007.

- NSW Health. Guidance Regarding Expedited Ethical and Scientific Review of Low and Negligible Risk Research: New South Wales. Sydney: NSW Health; 2023.

- National Health and Medical Research Council. Ethical conduct in research with Aboriginal and Torres Strait Islander Peoples and communities: Guidelines for researchers and stakeholders. Canberra: Commonwealth of Australia; 2018.

- National Health and Medical Research Council. Keeping research on track II: a companion document to Ethical conduct in research with Aboriginal and Torres Strait Islander Peoples and communities: Guidelines for researchers and stakeholders. Canberra: Commonwealth of Australia; 2018.

- AH&MRC Ethics Committee. AH&MRC Ethical Guidelines: Key Principles V2.0. Sydney: AH&MRC; 2020.

- Funnell SC, Rogers PJ. Purposeful Program Theory: Effective Use of Theories of Change and Logic Models. Hoboken: Wiley; 2011.

- Holt L. Understanding program logic. Victorian Government Department of Human Services; 2009.

- Hawe P, Degeling D, Hall J. Evaluating Health Promotion: A Health Worker’s Guide. Sydney: Maclennan & Petty; 1990.

- Round R, Marshall B, Horton K. Planning for effective health promotion evaluation. Melbourne: Victorian Government Department of Human Services; 2005.

- Centre for Epidemiology and Evidence. Engaging an Independent Evaluator for Economic Evaluations: A Guide. Evidence and Evaluation Guidance Series, Population and Public Health Division. Sydney: NSW Ministry of Health; 2023.

- Centre for Epidemiology and Evidence. Study Design for Evaluating Population Health and Health Service Interventions: A Guide. Evidence and Evaluation Guidance Series, Population and Public Health Division. Sydney: NSW Ministry of Health; 2023.

- Craig P, Dieppe P, Macintyre S, Michie S, Nazareth I, Petticrew M. Developing and evaluating complex interventions: the new Medical Research Council guidance. Br Med J 2008; 337: a1655.

- NSW Department of Health. Delegations Manual – Combined – Administrative, Financial, Staff. Sydney: NSW Department of Health; 1997.

- Moore G, Todd A, Redman S. Strategies to increase the use of evidence from research in population health policy and programs: a rapid review. Sydney: NSW Health; 2009. Available from: www.health.nsw.gov.au/research/Documents/10-strategies-to-increase-research-use.pdf

- Moore G, Campbell D. Increasing the use of research in policymaking. Sydney: NSW Health; 2017.